Building Large Language Models from Scratch - by Dilyan Grigorov (Paperback)

Pre-order

About this item

Highlights

- This book is a complete, hands-on guide to designing, training, and deploying your own Large Language Models (LLMs), from the foundations of tokenization to the advanced stages of fine-tuning and reinforcement learning.

- About the Author: Dilyan Grigorov is a software developer with a passion for Python software development, generative deep learning & machine learning, data structures, and algorithms.

- Computers + Internet, Intelligence (AI) & Semantics

Description

Book Synopsis

This book is a complete, hands-on guide to designing, training, and deploying your own Large Language Models (LLMs), from the foundations of tokenization to the advanced stages of fine-tuning and reinforcement learning. Written for developers, data scientists, and AI practitioners, it bridges core principles and state-of-the-art techniques, offering a rare, transparent look at how modern transformers work beneath the surface. Starting from the essentials, you'll learn how to set up your environment with Python and PyTorch, manage datasets, and implement critical fundamentals such as tensors, embeddings, and gradient descent. You'll then progress through the architectural heart of modern models, covering RMS normalization, rotary positional embeddings (RoPE), scaled dot-product attention, Grouped Query Attention (GQA), Mixture of Experts (MoE), and SwiGLU activations, each explored in depth and built step by step in code. As you advance, the book introduces custom CUDA kernel integration, teaching you how to optimize key components for speed and memory efficiency at the GPU level, an essential skill for scaling real-world LLMs. You'll also master the training phases that define today's leading models:

- Pretraining: building general linguistic and semantic understanding.
- Midtraining: expanding domain-specific capabilities and adaptability.
- Supervised Fine-Tuning (SFT): aligning behavior with curated, task-driven data.
- Reinforcement Learning from Human Feedback (RLHF): refining responses through reward-based optimization for human alignment.

- How to configure and optimize your development environment using PyTorch
- The mechanics of tokenization, embeddings, normalization, and attention mechanisms
- How to implement transformer components like RMSNorm, RoPE, GQA, MoE, and SwiGLU from scratch
- How to integrate custom CUDA kernels to accelerate transformer computations
- The full LLM training pipeline: pretraining, midtraining, supervised fine-tuning, and RLHF
- Techniques for dataset preparation, deduplication, model debugging, and GPU memory management
- How to train, evaluate, and deploy a complete GPT-like architecture for real-world tasks

About the Author

Dilyan Grigorov is a software developer with a passion for Python software development, generative deep learning and machine learning, data structures, and algorithms. He is an advocate for open source and for the Python language itself. He has 16 years of industry experience programming in Python and has spent 5 of those years researching and testing generative AI solutions. His passion for generative AI stems from his background as an SEO specialist who dealt with search engine algorithms daily. He enjoys engaging with the software community, often giving talks at local meetups and larger conferences. In his spare time, he enjoys reading books, hiking in the mountains, taking long walks, playing with his son, and playing the piano.

Shipping details

Return details

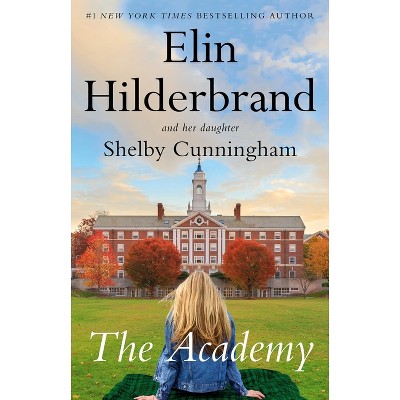

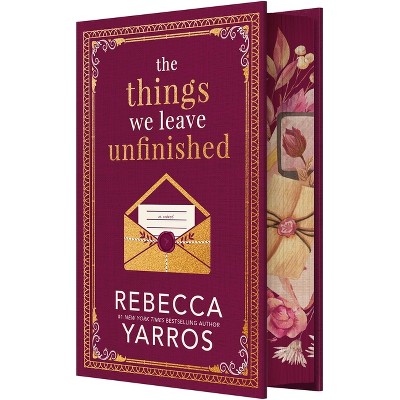

Trending Book Pre-Orders

Discover more options